The Systems Strike Back: What Vibe Coders, Slack, and LLMs Miss

Vibe coding, an AI automation example, Slack as a canary in the (AI data) coal mine, and the latest AI agent adoption numbers.

Hello! This week I’m diving into where we’re headed with “vibe coding”, how Slack is a canary in the coal mine for apps tightening data access in an AI-driven world, and some fresh insights into the adoption of, and pushback on, AI agents.

But first, I want to share some of the work we’ve been doing lately, as a bit of inspiration for what’s possible with AI 👇

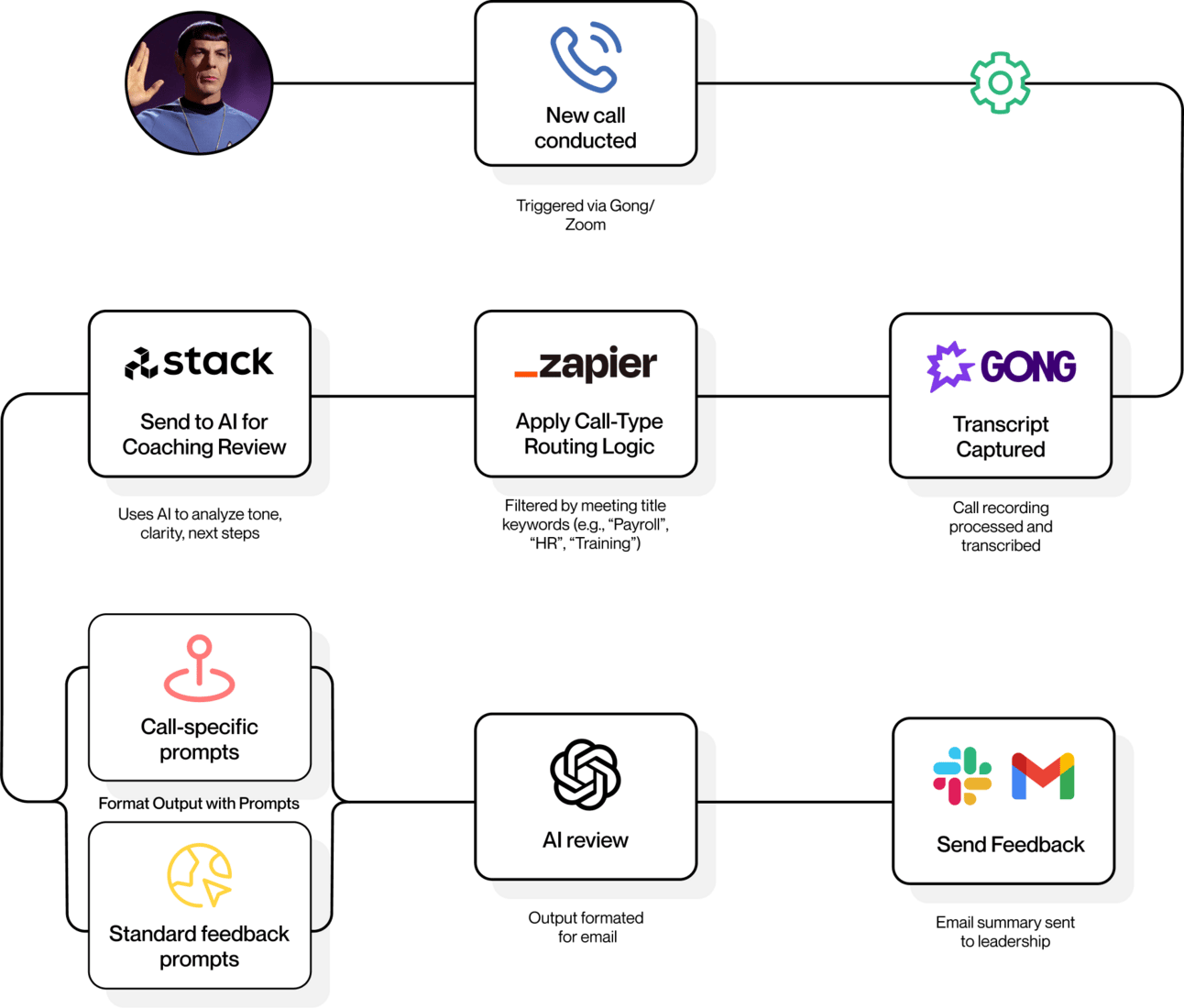

1. Automation to help scale Customer Success

The challenge:

A fast-growing SaaS team needed to scale onboarding—but their Customer Success team was drowning in calls. Feedback was late or missing. Coaching became guesswork. Quality slipped.

What we fixed:

We rebuilt the QA workflow from the ground up.

→ Calls are auto-transcribed

→ Routed to the right prompt

→ Reviewed by AI with human-in-the-loop checks

→ Summaries sent to CS leads—fast

AI System to Review Customer Success Calls at Scale.

The result:

250+ collective hours saved per month across the Customer Success team

80%+ of calls reviewed by AI with fallbacks to humans when needed

Better coaching, better retention, happier customers
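The QA workflow described above (transcribe, route to a prompt, review with a human fallback, summarize for leads) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the system we built: transcripts are assumed to already exist, routing uses simple keyword rules, and `fake_model_review` is a hypothetical stand-in for an LLM call. The prompt templates, the 0.7 confidence cutoff, and the `[inaudible]` heuristic are all made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical prompt templates keyed by call type.
PROMPTS = {
    "onboarding": "Review this onboarding call for setup steps covered and next actions:\n{transcript}",
    "renewal": "Review this renewal call for risk signals and commitments made:\n{transcript}",
    "general": "Review this customer call for tone, accuracy, and follow-ups:\n{transcript}",
}

# Assumed cutoff: reviews below this confidence go to a human.
CONFIDENCE_THRESHOLD = 0.7


@dataclass
class Review:
    call_id: str
    prompt_key: str
    score: float       # 0-1 quality score from the reviewer
    confidence: float  # reviewer's confidence in its own score
    needs_human: bool = False
    notes: list[str] = field(default_factory=list)


def route(transcript: str) -> str:
    """Pick a prompt template with keyword rules (a stand-in for a real classifier)."""
    text = transcript.lower()
    if "onboard" in text or "setup" in text:
        return "onboarding"
    if "renew" in text or "contract" in text:
        return "renewal"
    return "general"


def fake_model_review(prompt: str) -> tuple[float, float]:
    """Hypothetical placeholder for an LLM call; returns (score, confidence).

    Gaps in the transcript lower confidence so the human fallback gets exercised.
    """
    confidence = 0.4 if "[inaudible]" in prompt else 0.9
    return 0.8, confidence


def review_call(call_id: str, transcript: str) -> Review:
    key = route(transcript)
    prompt = PROMPTS[key].format(transcript=transcript)
    score, confidence = fake_model_review(prompt)
    review = Review(call_id, key, score, confidence)
    if confidence < CONFIDENCE_THRESHOLD:
        # Human-in-the-loop: low-confidence reviews are queued for a person.
        review.needs_human = True
        review.notes.append("low model confidence: queued for human QA")
    return review


def summarize(reviews: list[Review]) -> str:
    """Build the short digest sent to CS leads."""
    by_ai = sum(1 for r in reviews if not r.needs_human)
    by_human = len(reviews) - by_ai
    return f"{len(reviews)} calls reviewed: {by_ai} by AI, {by_human} sent to human QA"
```

Running `review_call` on each transcript and passing the results to `summarize` produces the kind of digest a CS lead might receive. In a real setup, the routing rules and confidence threshold would be checked against a sample of human-reviewed calls rather than picked up front.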

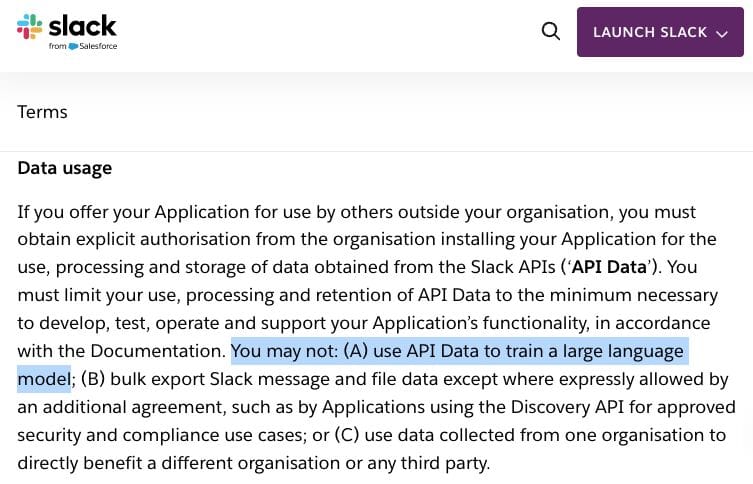

2. Slack to Your Data: “You Shall Not Pass”

What happened:

Slack and its owner, Salesforce, are limiting access for AI tools like Notion and Glean. These tools help teams search across platforms like Slack, email, and docs. But now, Slack appears to be blocking that data from external models so it can charge $10 for the same feature, available only through Slack AI.

Ooof

Why it matters:

Even if you don’t use Slack or the tools it’s now blocking, it’s worth keeping tabs on the trend of companies blocking LLM-powered search. I expect we’ll see more platforms unwilling to play nice with integrations.

This is why we tell so many of our clients that it’s important to own as much of their data as possible and to build direct integrations (versus “off the shelf” connections).

The big picture: workplace content (your messages, docs, and knowledge) lives inside vendor ecosystems. If those systems don’t support external AI tooling, your efforts to build internal, AI-powered intelligence get stuck.

3. “Vibe Coding” Is Having a Moment

What’s happening:

We’ve all heard about “vibe coding” by now.

If you’ve only heard about it at a surface level, Wired profiled it as a rising trend inside engineering teams: shipping fast, skipping tests, and relying more on intuition than documentation.

Lovable, Cursor, and Replit are popular options, and OpenAI reportedly agreed to buy Windsurf for about $3 billion.

Why it matters:

The shift is real and polarizing to many. Some teams say it keeps things moving. Others see it as a fast track to technical debt.

We’re building internal tools right now with platforms like Lovable and Replit. As someone who’s worked with engineering and product teams for years, I’ll say this: it feels like the future, and I expect it to become the norm within 18 months.

What we’re seeing:

🛠 Traditional Engineering & Product playbooks are disappearing fast

🏃 You can ship tools and proof of concepts at rapid speed

🧠 Shared knowledge is harder to scale

The debate isn’t about right or wrong. It’s about trade-offs: clarity vs. chaos, speed vs. structure. If everyone’s shipping but no one’s documenting how it works, what happens when the team changes? And the bigger question: will that even matter in the next 12-18 months?

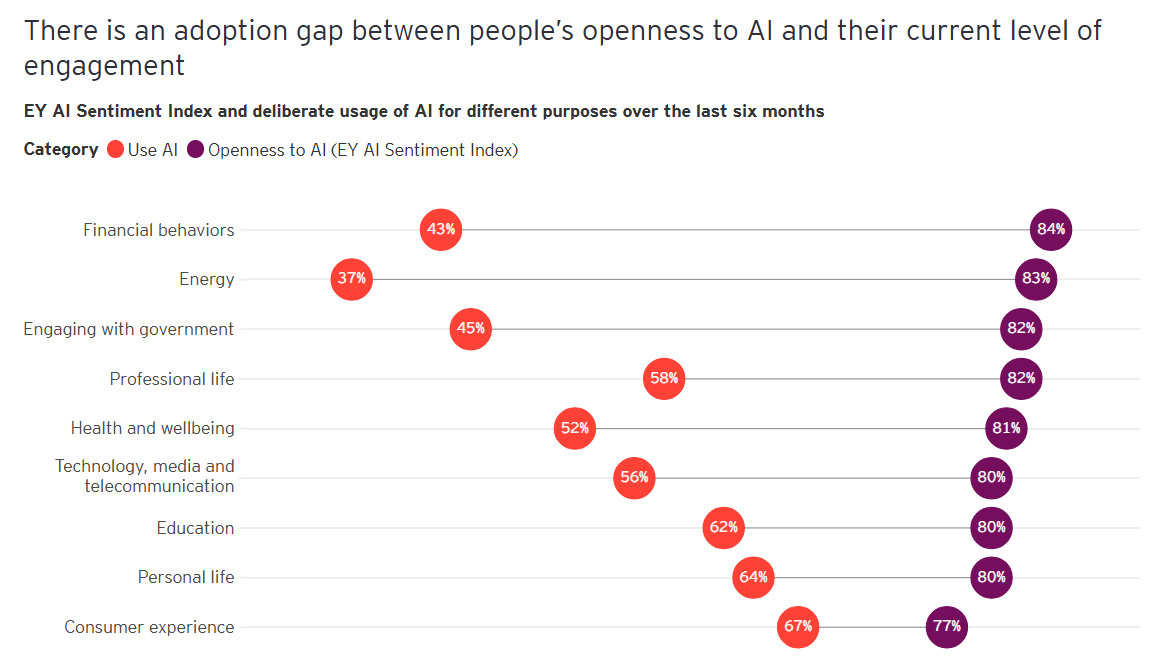

4. AI Agents Are Coming…Slowly

The signal:

Agentic AI (tools that can complete tasks without human oversight) is gaining traction. But there’s a catch: adoption is outpacing trust, and that gap is slowing real progress.

What the data shows:

📊 77% of workers want tools that reduce mental effort

📊 44% of CEOs say employees are hesitant to use AI

📉 Even in high-ROI sectors like finance, engagement is lagging behind interest

Openness isn’t the issue; engagement is. Most sectors still haven’t bridged the AI usage gap.

Where the hesitation starts:

It’s not just about capability; it’s about control. Understandably, the more decision-making power you give AI, the more people hesitate.

Why this matters:

Agentic tools already deliver value in finance, healthcare, and operations. But without trust, they won’t scale. Governance, transparency, and communication are now just as critical as model performance.

We’re building AI agents ourselves and approach the work with two beliefs that have served us well so far:

Use cases should be narrow so the inputs and outputs can be closely monitored and quality controlled

Agents shouldn’t interact with outside inputs or be external-facing
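Those two rules map fairly directly onto guardrails around an agent. Here is a hypothetical Python wrapper, not any specific framework’s API: inputs are only accepted from an assumed allowlist of internal sources, outputs must be one of a small set of allowed actions, and every accepted action is logged for review. The source names, action names, and size limit are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical allowlist: the only actions this narrow agent may take.
ALLOWED_ACTIONS = {"tag_ticket", "draft_internal_summary"}
# Hypothetical internal-only sources; anything else is rejected up front.
INTERNAL_SOURCES = {"crm", "support_queue"}


@dataclass
class AgentInput:
    source: str
    text: str


@dataclass
class AgentOutput:
    action: str
    payload: str


class GuardrailError(Exception):
    pass


def check_input(item: AgentInput, max_chars: int = 2000) -> None:
    """Reject inputs from outside sources or larger than this use case expects."""
    if item.source not in INTERNAL_SOURCES:
        raise GuardrailError(f"external source not allowed: {item.source}")
    if len(item.text) > max_chars:
        raise GuardrailError("input longer than expected for this use case")


def check_output(out: AgentOutput) -> None:
    """Only allow actions on the allowlist, with non-empty payloads."""
    if out.action not in ALLOWED_ACTIONS:
        raise GuardrailError(f"action not allowed: {out.action}")
    if not out.payload.strip():
        raise GuardrailError("empty payload")


def run_narrow_agent(
    item: AgentInput,
    agent: Callable[[AgentInput], AgentOutput],
    audit_log: list,
) -> AgentOutput:
    """Wrap any agent callable with input/output checks and an audit trail."""
    check_input(item)
    out = agent(item)
    check_output(out)
    # Only actions that passed both checks are recorded.
    audit_log.append((item.source, out.action))
    return out
```

Keeping the allowlists small is what makes the inputs and outputs easy to monitor: anything the agent was not scoped for fails loudly instead of running quietly.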

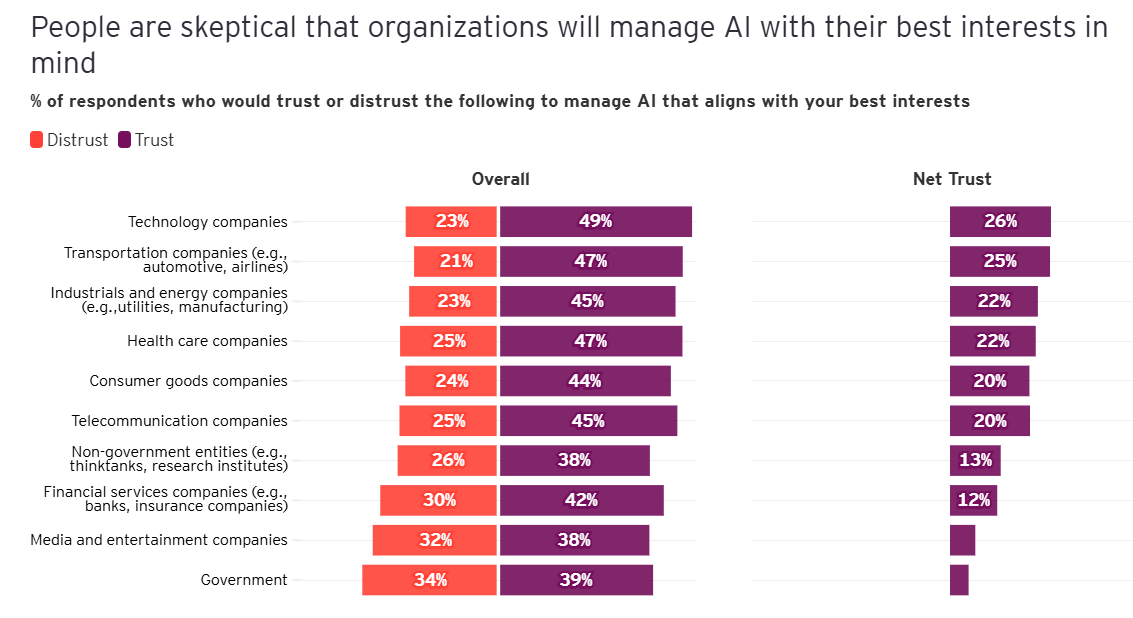

Skepticism is a barrier to agent adoption, not because of the AI itself, but because of the orgs using it.

📖 Post of the Week

The new normal 🙃

joined a call and it's just me and a dozen AIs

— Dani Grant (@thedanigrant)

11:42 PM • Jun 10, 2025

Chat soon 👋

PS: we’re busy, but if you’re looking to start the fall with an AI strategy for your team, now’s the time to chat.

New here? Consider subscribing.